Google Analytics is a powerful tool for measuring your website’s performance. The data it gathers are invaluable to you as a marketer: they can give you a clear view of the decisions you need to make to benefit your brand. Data, however, are just numbers and graphs. On their own, they cannot tell a story. It’s your job as a marketer to deduce that story through sound, unbiased analysis and to avoid falling for Google Analytics myths.

If Google Analytics terms and data confuse you more than they enlighten you, this article will help you understand four Google Analytics and SEO-related myths you need to avoid.

How do I use Google Analytics?

Business owners use Google Analytics (GA) to see what they’re doing right, in terms of getting quality traffic to their sites. If you’re a business owner hoping to expand your presence in online spheres, you’ll need analytics to measure your success.

With the use of metrics, Google Analytics tracks who visits your site, how long they stay, what device they’re using, and what link brought them there. With these data, you can discover how to improve your online marketing and SEO strategies.

Google Analytics basics

At first, it may seem like Google Analytics is serving you raw data that are too complicated to digest. Learning to speak the analytics language, though, is easier than you think. Below are some basic terms to help you better understand the data reported by Google Analytics:

Pageviews

Pageviews are the total number of times users have viewed a page on your site. This includes instances in which users refresh the page or jump to another page and promptly go back to the page they had just left. This metric shows which pages are most popular.

Visits/Sessions

A session is a group of interactions a user has with your website within a given time frame, regardless of whether they view one page or several. By default, a session times out after 30 minutes of inactivity. This means that if users stay on the site but remain inactive for 30 minutes, the session ends. If they leave the site and come back within 30 minutes, though, the return is counted as part of the same session.

Average session duration refers to the average time users spend on your site per session. Pages per session, on the other hand, is the average number of pages that users view on your site within a single session.
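
The 30-minute window can be sketched in a few lines of Python. This is only an illustration of the idea, not how Google Analytics is implemented: the function name `split_into_sessions` and the sample timestamps are made up, and the exact handling of a gap of precisely 30 minutes is an assumption.

```python
from datetime import datetime, timedelta

# Assumed timeout, matching the 30-minute window described above.
SESSION_TIMEOUT = timedelta(minutes=30)

def split_into_sessions(hit_times):
    """Group one user's hit timestamps into sessions by inactivity gap."""
    sessions = []
    for ts in sorted(hit_times):
        # Start a new session if this is the first hit or the gap is too long.
        if not sessions or ts - sessions[-1][-1] > SESSION_TIMEOUT:
            sessions.append([ts])
        else:
            sessions[-1].append(ts)
    return sessions

# Hypothetical hits from a single user on one day.
hits = [
    datetime(2020, 1, 1, 9, 0),
    datetime(2020, 1, 1, 9, 10),
    datetime(2020, 1, 1, 9, 35),   # 25 minutes after the previous hit: same session
    datetime(2020, 1, 1, 10, 30),  # 55 minutes later: a new session starts
]
sessions = split_into_sessions(hits)
print(len(sessions))  # 2
```

The third hit arrives 35 minutes after the first one, yet it stays in the same session, because the window is measured from the user's most recent activity rather than from the start of the visit.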

Time on Page

This refers to the average time users spend on a page on your site. It can help you determine which pages hold users’ attention the longest. The clock starts the second a pageview is counted and stops when the subsequent pageview is recorded.
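
As a rough sketch of that definition, time on page can be modeled as the gap between one pageview and the next. The function `time_on_page` and the sample session below are hypothetical. Note that under this definition the last page of a session has no subsequent pageview, so the sketch records no time for it.

```python
def time_on_page(pageviews):
    """pageviews: ordered list of (page, seconds_since_session_start)."""
    durations = {}
    # Pair each pageview with the one that follows it.
    for (page, start), (_, next_start) in zip(pageviews, pageviews[1:]):
        durations.setdefault(page, []).append(next_start - start)
    return durations

# Hypothetical single session: three pageviews with their start offsets.
session = [("/home", 0), ("/pricing", 40), ("/contact", 130)]
durations = time_on_page(session)
averages = {page: sum(times) / len(times) for page, times in durations.items()}
print(averages)  # {'/home': 40.0, '/pricing': 90.0}
```

This is one reason a short or missing time on page does not always mean users lost interest: the final page of a visit may simply have nothing after it to stop the clock.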

Traffic

Traffic refers to the number of people accessing your website. It comes from a traffic source, which is any place users come from before they land on your pages.

Traffic is classified into direct and referral. Direct traffic comes from users who type in the whole URL or are given the URL directly without searching for it. Referral traffic is directed from links on other sites, like search results or social media.

Unique Pageviews

Unique pageviews count the number of sessions in which a page was viewed at least once. They don’t count the times users navigated back to that page in the same session. For example, if a user navigates the whole site in one session and returns to the original page three times, the unique pageview count for that page is still one.
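
The difference between the two counts is easy to see in a small Python sketch. The `count_views` helper and the sample hits are invented for illustration and assume each pageview is logged as a (session, page) pair.

```python
from collections import Counter

def count_views(hits):
    """hits: list of (session_id, page) tuples, one per pageview."""
    # Every pageview counts, including repeats within a session.
    pageviews = Counter(page for _, page in hits)
    # Deduplicate (session, page) pairs so each page counts once per session.
    unique_pageviews = Counter(page for _, page in set(hits))
    return pageviews, unique_pageviews

# Hypothetical log: session s1 returns to /home twice, session s2 visits it once.
hits = [
    ("s1", "/home"), ("s1", "/blog"), ("s1", "/home"),
    ("s1", "/about"), ("s1", "/home"),
    ("s2", "/home"),
]
pageviews, unique_pageviews = count_views(hits)
print(pageviews["/home"], unique_pageviews["/home"])  # 4 2
```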

Unique Visitors

When a user visits your site for the first time, both a unique visitor and a new visit are counted. Google Analytics uses cookies to determine this. If the same user comes back to the site on the same browser and device, only a new visit is counted, not another unique visitor. But if that user deletes their cookies or accesses the site through a different browser or device, they may be falsely added as a unique visitor.
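
A short sketch shows why cookie-based counting can overstate your audience. The names and cookie IDs below are made up, and the `person` field is something an analytics tool would not actually know; it is included only to compare the two counts.

```python
# Hypothetical visits: "client_id" stands in for the cookie value that
# identifies a browser; "person" is the real human behind it.
visits = [
    {"person": "Ana", "client_id": "cookie-a1"},  # laptop browser
    {"person": "Ana", "client_id": "cookie-a1"},  # return visit, same browser
    {"person": "Ana", "client_id": "cookie-b7"},  # same person on her phone
    {"person": "Ben", "client_id": "cookie-c3"},
]

# Counting distinct cookies treats Ana's phone as a separate visitor.
unique_visitors = len({v["client_id"] for v in visits})
actual_people = len({v["person"] for v in visits})
print(unique_visitors, actual_people)  # 3 2
```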

Hits

Hits are interactions or requests made to a site. These include pageviews, events, and transactions. A group of hits makes up a session, which is used to gauge a user’s engagement with the website.

Clicks

Clicks are the number of times users click through to your site from search engine results. Click-through rate (CTR) is the total number of clicks divided by impressions, that is, the number of times your page appears in users’ search results. If CTR is dropping, consider writing titles and meta descriptions that capture your users’ attention better.
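
As a worked example of that formula, here is a minimal Python sketch. The function name and the figures are hypothetical.

```python
def click_through_rate(clicks, impressions):
    """CTR = clicks / impressions; returns 0.0 when there are no impressions."""
    if impressions == 0:
        return 0.0
    return clicks / impressions

# Hypothetical numbers: 45 clicks from 1,500 appearances in search results.
ctr = click_through_rate(clicks=45, impressions=1500)
print(f"{ctr:.1%}")  # 3.0%
```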

Events

Events are actions users take on a particular site. These include clicking buttons to see other pages or download files. Events show you what kind of content encourages users to interact with the page.

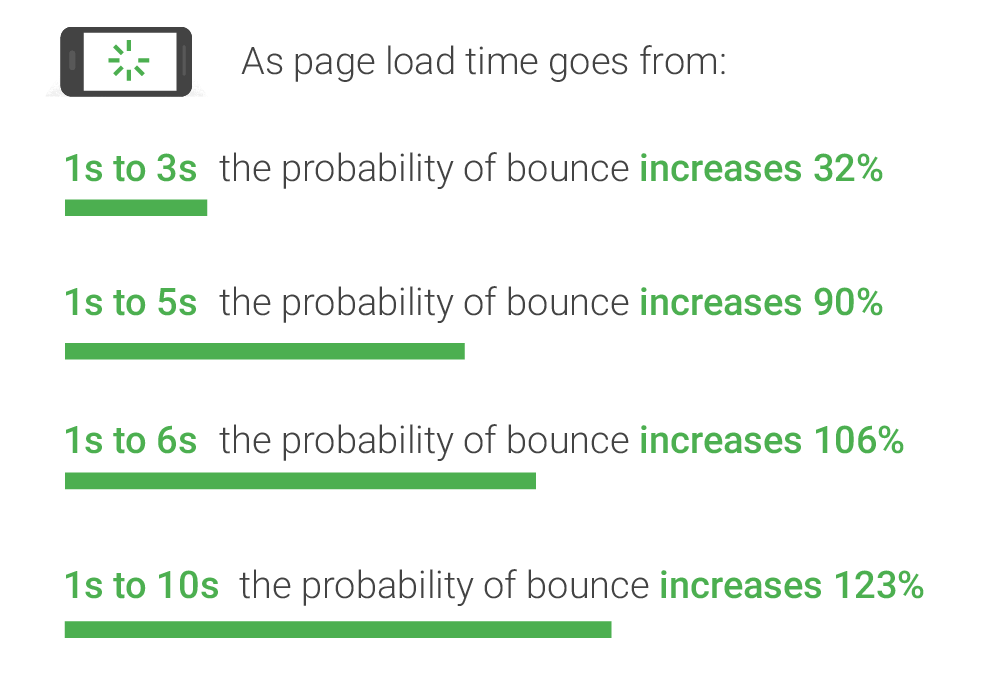

Bounce rate

Bounce rate refers to the share of single-page sessions, in which users land on a page and exit without interacting with a single element on it. A high bounce rate can mean either that users swiftly found what they were looking for or that they did not find the content on the page interesting enough to stay longer and engage.
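
One simplified way to picture the calculation is to treat a bounce as a session with exactly one hit. The `bounce_rate` helper and the sample sessions are assumptions made for illustration, not Google Analytics internals.

```python
def bounce_rate(sessions):
    """Share of sessions made up of a single hit (one pageview, no interaction)."""
    if not sessions:
        return 0.0
    bounces = sum(1 for hits in sessions if len(hits) == 1)
    return bounces / len(sessions)

# Hypothetical sessions, each listed as the hits it contains.
sessions = [
    ["pageview"],              # bounce
    ["pageview", "pageview"],  # viewed a second page
    ["pageview", "event"],     # interacted with the page
    ["pageview"],              # bounce
]
rate = bounce_rate(sessions)
print(f"{rate:.0%}")  # 50%
```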

Goals

You can input goals in your Google Analytics account to track user interactions on your site. These interactions include submitting a report, subscribing to your newsletter, or downloading files. If the user performs an event that you’ve identified as a goal, Analytics counts this as a conversion.

Four common Google Analytics myths debunked

Now that you have an overview of Google Analytics terms, below are four common misconceptions surrounding those terms and how to avoid them as a marketer.

1. The more traffic that goes to your site, the better

The myth

Generally, you’d want more people to visit your site. A huge number of visits, though, won’t matter if they don’t turn into conversions. Even if thousands of people flock to your webpages each day, if they don’t take the desired actions your SEO campaign is aiming for, these visits won’t provide any benefit for your site.

The truth

A good SEO strategy is built upon making sure that once you’ve garnered a pageview, the quality of your content drives the user to the desired action, such as subscribing to a newsletter.

Keyword research can help make sure that you use the right terms to get you a higher ranking on SERPs. The material on your site, however, is also crucial in satisfying your users’ queries, enough to get a conversion.
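
To make the point concrete, the sketch below compares two traffic sources by conversion rate instead of raw visits. All figures and source names are invented, and `conversion_rate` is a hypothetical helper.

```python
def conversion_rate(sessions, conversions):
    """Conversions divided by sessions; 0.0 when there are no sessions."""
    return conversions / sessions if sessions else 0.0

# Invented figures: "social" brings five times the traffic of "organic".
sources = {
    "social": {"sessions": 10000, "conversions": 50},
    "organic": {"sessions": 2000, "conversions": 80},
}
rates = {name: conversion_rate(**stats) for name, stats in sources.items()}
best = max(rates, key=rates.get)
print(best, f"{rates[best]:.1%}")  # organic 4.0%
```

In this made-up case the smaller source produces more conversions in total and per visit, which is exactly what a traffic-only view would miss.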

2. Users need to spend more time on webpages

The myth

Users spending a few quick seconds on your page is not entirely bad. This may mean that these users are looking for quick, precise answers. Quality SEO delivers this to them through well-placed keywords and concise content. Hence, if they quickly get the answers they need, they tend to leave the site immediately.

The truth

Quality SEO content ensures that your material is written in such a way that it invites users to learn more about the subject, which can be seen when they are led to another page on your site. This leads them one step closer to taking the desired action on your site.

3. The number of unique visitors is an accurate metric to measure audience traffic

The myth

An upsurge of unique visitors on your page doesn’t necessarily mean that your audience is growing. Unique visitors are measured by cookies that Google uses to determine if it’s a user’s first time on a site. The same user accessing the same page through a different browser, or through a browser whose cookies have been cleared, is counted as a unique visitor too.

The truth

If you’re looking to study your audience, it’s not enough to look at how many of them go to your page. You can refer to the Audience > Demographics tab to see who is navigating your site and which marketing links directed them there. With this information, you can determine what types of content gather the most traffic and which avenues this traffic comes from, such as SERPs or social media posts.

4. Traffic reports are enough to tell if your campaign is successful

The myth

Looking at traffic reports alone is not enough to determine whether your SEO campaign is successful or whether your keyword research paid off. Although heavy traffic may at first seem to signal an effective online marketing strategy, it only captures the quantitative aspect of your campaign and dismisses the qualitative side.

The truth

Make full use of all the reports in GA. Each of them reflects some part of how your campaign is going. Read together, the reports let you address issues comprehensively instead of nitpicking a single aspect of a campaign just because one report suggests it’s not doing its job.

These points will help you clear the air when it comes to Google Analytics and help you correctly derive insights.

The post Four common Google Analytics myths busted appeared first on Search Engine Watch.
